What Is Technical SEO?

Technical SEO is the process of optimizing a website so that search engines such as Google and Bing can crawl and index its pages. It covers not only technical improvements but also UX improvements. Making a website faster, more crawlable, and easier to understand are the pillars of technical SEO.

Here are some of the key aspects of technical SEO:

Website Crawlability

Website Indexing

Website Content Optimization

Website Performance

Mobile Optimization

Website Security

Why Is Technical SEO Important?

Your site may still suffer from low rankings even if you have the most thorough, helpful, and well-written content among your competitors. Googlebot crawls, indexes, and ranks web pages based on many factors, and your content cannot attract visitors without a strong technical SEO foundation.

Technical SEO is important for several reasons:

- It helps search engines understand and index your website. Search engines use bots to crawl the web and index websites. If your website is not technically sound, search engines may have trouble accessing and understanding your pages, which can result in lower rankings in search results.

- It improves the user experience of your website. A technically sound website is fast, easy to navigate, and mobile-friendly. This provides a good user experience for your visitors, which can lead to increased engagement, conversions, and sales.

- It helps you avoid penalties from search engines. Search engines have certain guidelines that websites must follow. If your website violates these guidelines, you may be penalized and your rankings may drop. Technical SEO helps you stay compliant with search engine guidelines and avoid these penalties.

Here are some specific examples of how technical SEO can benefit your website:

Increased organic traffic: By improving your website’s crawl accessibility and indexability, you can make your website more visible to search engines, which can lead to more organic traffic from potential customers.

Improved user experience: A website with a clear and well-structured hierarchy, fast loading times, and a mobile-friendly design is more likely to keep users engaged and satisfied, which can lead to higher conversion rates and customer loyalty.

Enhanced brand perception: A technically sound website reflects positively on your brand, demonstrating professionalism and attention to detail, which can attract more customers and partners.

Reduced technical issues: By addressing technical issues proactively, you can prevent website downtime, broken links, and other problems that can frustrate users and negatively impact your SEO efforts.

Overall, technical SEO plays a crucial role in ensuring your website’s success in search engines and providing a positive user experience. By investing in technical SEO, you can improve your website’s visibility, attract more visitors, and achieve your online business goals.

Crawling, Rendering and Indexing

For your website to rank well on a search engine results page (SERP), it needs to be crawlable and indexable. Crawlability and indexability are the foundations of SEO; without them, your website cannot appear in search results. Ranking is the ultimate goal of SEO, and it is achieved by optimizing your website for the factors that search engines consider important.

Crawlability is the ability of search engine crawlers, such as Googlebot, to access and understand the content of a website. It is an important factor in search engine optimization (SEO) because it determines whether and how a search engine will index a website’s pages. If a website is not crawlable, it will not appear in search engine results pages (SERPs).

Crawlability is the first step in optimizing your website for technical SEO.

1. Create Clear, SEO-Friendly Website Architecture:

Site structure is the way pages are linked within a website. The best approach is to organize your pages hierarchically, with clear parent-child relationships. This helps Googlebot understand how pages relate to one another and ensures that no page is left orphaned, meaning that no internal link points to it.
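As a rough illustration of the orphan-page idea, the sketch below walks a small link graph from the home page and reports every known page that cannot be reached by following internal links. The `site_links` data and the `find_orphan_pages` helper are hypothetical; in practice the link graph would come from a crawl of your own site.

```python
from collections import deque

def find_orphan_pages(links, home):
    """Return known pages that cannot be reached from `home` via internal links.

    `links` maps each page URL to the list of pages it links to.
    """
    reachable = {home}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in reachable:
                reachable.add(target)
                queue.append(target)
    # Every page we know about, whether it links out or is only linked to.
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    return sorted(all_pages - reachable)

# Hypothetical site: /old-promo/ exists, but no other page links to it.
site_links = {
    "/": ["/blog/", "/products/"],
    "/blog/": ["/blog/technical-seo/"],
    "/products/": ["/products/widget/"],
    "/blog/technical-seo/": ["/"],
    "/products/widget/": [],
    "/old-promo/": ["/"],
}

print(find_orphan_pages(site_links, "/"))  # ['/old-promo/']
```

Any page this reports is a candidate for either a new internal link from a relevant parent page or removal.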

2. Submit Your Sitemap to Google:

A sitemap is an XML file that lists all the URLs on your website that you want indexed. A sitemap helps Google find your webpages. Once you have located your sitemap, submit it to Google through your Google Search Console account.

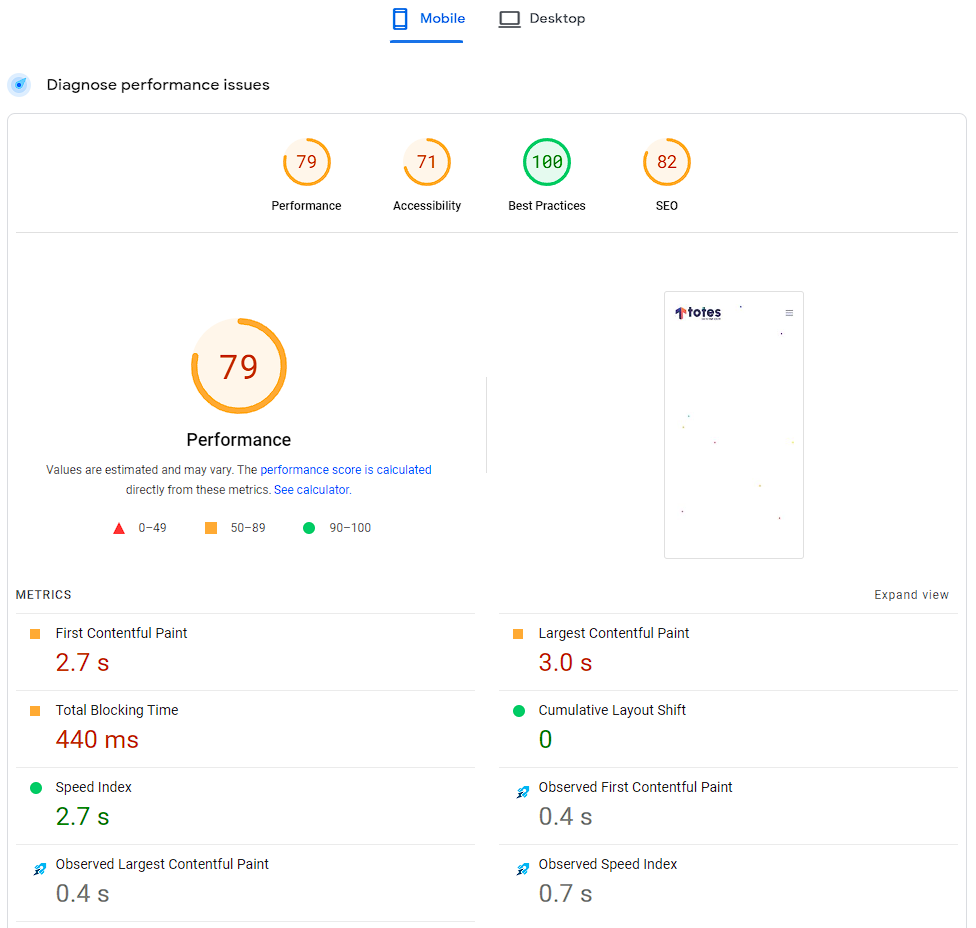
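If your site does not have a sitemap yet, a minimal one can be generated with a few lines of code. The sketch below uses Python's standard library and the namespace from the sitemaps.org protocol; the `build_sitemap` helper and the example.com URLs are placeholders, and most CMS platforms or SEO plugins can produce this file for you.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(urls):
    """Return a sitemap XML string with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Placeholder URLs: list only the pages you want indexed.
sitemap_xml = build_sitemap([
    "https://example.com/",
    "https://example.com/blog/technical-seo/",
])
print(sitemap_xml)
```

The resulting text is typically saved as sitemap.xml at the root of the site before it is submitted.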

3. Optimize Website Speed and Performance

Slow page loading times can negatively impact a website’s crawlability. A page that takes too long to respond may not be crawled fully, and slow responses can reduce how many pages a crawler gets through in a visit. Before you start optimizing, consider measuring your pages with Google’s PageSpeed Insights tool.

4. Use Robots.txt Effectively

Robots.txt is a simple text file located at the root of your domain that tells search engine crawlers and other robots how to crawl the pages on your website. It is part of the Robots Exclusion Protocol (REP) and specifies whether particular user agents may crawl specific parts of a website.

The following robots.txt rule prevents all search engines from crawling the entire website:

User-agent: *

Disallow: /

To disallow a specific path:

User-agent: *

Disallow: /wp-admin/

To disallow a specific bot:

User-agent: Bingbot

Disallow: /
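Before deploying a robots.txt file, it can help to check how its rules will be interpreted. The sketch below combines the path and bot rules shown above into one file and tests them with `urllib.robotparser` from Python's standard library. The `can_crawl` helper and the example.com URLs are illustrative, and real crawlers may interpret edge cases slightly differently from this parser.

```python
from urllib.robotparser import RobotFileParser

def can_crawl(robots_txt, user_agent, url):
    """Return True if `user_agent` may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

# Block Bingbot entirely; block /wp-admin/ for every other crawler.
ROBOTS_TXT = """\
User-agent: Bingbot
Disallow: /

User-agent: *
Disallow: /wp-admin/
"""

print(can_crawl(ROBOTS_TXT, "Googlebot", "https://example.com/blog/"))      # True
print(can_crawl(ROBOTS_TXT, "Googlebot", "https://example.com/wp-admin/"))  # False
print(can_crawl(ROBOTS_TXT, "Bingbot", "https://example.com/blog/"))        # False
```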

5. Mobile-Friendly Design:

With the increasing importance of mobile search, make sure your website is optimized for mobile devices. Search engines favor mobile-friendly sites, and a responsive design improves both the user experience and crawling efficiency. The experience you offer on mobile is therefore as important as the one on desktop.

You can use Google’s Mobile-Friendly Test tool to check for issues on mobile. (Note: this tool will be retired on December 1, 2023.)

6. Optimize Internal Linking Strategy

To increase each page’s visibility within a website, you need to set up internal linking properly. Especially if your website has more than 10,000 pages, a comprehensive internal linking strategy is one of the most important technical SEO improvements for keeping the website crawlable.
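One common way to audit internal linking is to measure click depth, the number of links a crawler must follow from the home page to reach a page. The text above does not prescribe a specific method, so treat this as one possible approach. The `click_depths` helper, the sample link graph, and the depth threshold of 2 are all assumptions chosen for illustration.

```python
from collections import deque

def click_depths(links, home):
    """Return a dict mapping each reachable page to its click depth from `home`."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                # Breadth-first search: the first visit is the shortest path.
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical site where a product is only reachable through pagination.
site_links = {
    "/": ["/category/"],
    "/category/": ["/category/page-2/"],
    "/category/page-2/": ["/product-a/"],
    "/product-a/": [],
}

depths = click_depths(site_links, "/")
deep_pages = [page for page, depth in depths.items() if depth > 2]
print(deep_pages)  # ['/product-a/']
```

Pages that show up as deep are candidates for additional internal links from pages closer to the home page.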

7. Fix Redirects and Broken Links

Google recommends that the URLs you want indexed be final URLs that return a 200 status code. Regularly check for and fix broken links, as they can hinder crawlers’ ability to navigate your site. Implement proper redirects when necessary, using 301 redirects for permanent moves and 302 redirects for temporary ones.
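To make the idea of a "final URL" concrete, the sketch below follows redirects through a table of crawl results until it reaches a page that is not a redirect. The `crawl_results` mapping and the `resolve_final_url` helper are hypothetical stand-ins for data exported from a crawler; no network requests are made.

```python
def resolve_final_url(url, responses, max_hops=10):
    """Follow 301/302 redirects and return (final_url, status, hops).

    `responses` maps a URL to a (status_code, redirect_target) tuple.
    URLs missing from the table are treated as 404s.
    """
    hops = 0
    while hops <= max_hops:
        status, location = responses.get(url, (404, None))
        if status in (301, 302) and location:
            url = location
            hops += 1
            continue
        return url, status, hops
    raise RuntimeError("Too many redirects (possible redirect loop)")

# Hypothetical crawl export: status code and redirect target per URL.
crawl_results = {
    "/old-page/": (301, "/interim-page/"),
    "/interim-page/": (302, "/new-page/"),
    "/new-page/": (200, None),
    "/removed/": (404, None),
}

print(resolve_final_url("/old-page/", crawl_results))  # ('/new-page/', 200, 2)
print(resolve_final_url("/removed/", crawl_results))   # ('/removed/', 404, 0)
```

A result with more than one hop suggests a redirect chain: internal links pointing to `/old-page/` could be updated to link to `/new-page/` directly. A final status other than 200 marks a broken link.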

Indexability: Once Googlebot completes the crawl step, it starts indexing your website. The search engine then stores the content in its search index.

1. Noindex Tag

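The “noindex” tag is an HTML snippet, placed within the <head> section of your webpage, that keeps the page out of Google’s index, for example <meta name="robots" content="noindex">. The page must remain crawlable (not blocked in robots.txt); otherwise the crawler never sees the tag.

Use the “noindex” tag only when you want to exclude certain pages from indexing, such as a “thank you” page.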
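Because an accidental noindex tag can silently remove an important page from search results, it is worth checking pages for it. The sketch below uses Python's built-in `html.parser` to look for a robots meta tag containing the noindex directive. The `RobotsMetaParser` class, the `is_noindex` helper, and the sample HTML are illustrative assumptions; the sketch does not cover bot-specific meta tags or the X-Robots-Tag HTTP header.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collect the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, follow".
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )

def is_noindex(html):
    """Return True if the page carries a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives

# Hypothetical pages for illustration.
thank_you_page = (
    '<html><head><meta name="robots" content="noindex, follow"></head>'
    "<body>Thanks for your order!</body></html>"
)
product_page = "<html><head><title>Widget</title></head><body>Buy now</body></html>"

print(is_noindex(thank_you_page))  # True
print(is_noindex(product_page))    # False
```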

2. Canonical Tag

A canonical tag is an HTML element that helps search engines identify the preferred version of a web page when there are multiple versions with similar or identical content. It is used to prevent duplicate content issues and ensure that search engines index the most relevant and authoritative version of a page.

<link rel="canonical" href="https://example.com/canonical-url">

The canonical tag serves several important use cases:

Prevent Duplicate Content Issues: Duplicate content occurs when the same or very similar content appears on multiple URLs. This can confuse search engines and lead to ranking problems. Canonical tags help consolidate link equity and ensure that search engines index the preferred version of a page, so ranking signals are not split across duplicates.

Improve Search Engine Rankings: By indicating the canonical version of a page, canonical tags help search engines understand which version of the content is the most authoritative and relevant. This can lead to improved search engine rankings for the canonical page.

Enhance User Experience: Because search engines tend to show the canonical URL in their results, canonical tags help users arriving from search land on the most relevant and up-to-date version of a page, improving the overall user experience.
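To verify that duplicate URLs point to the version you intend, you can extract the canonical URL from a page's HTML. The following is a minimal sketch using Python's built-in `html.parser`; the `CanonicalParser` class, the `get_canonical` helper, and the sample page are hypothetical, and the sketch ignores canonicals declared through HTTP headers.

```python
from html.parser import HTMLParser

class CanonicalParser(HTMLParser):
    """Capture the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        # rel may hold several space-separated values.
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attributes.get("href")

def get_canonical(html):
    """Return the canonical URL declared in the HTML, or None if absent."""
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical

# Hypothetical page served at https://example.com/shoes?utm_source=newsletter
tracked_page = (
    '<html><head><link rel="canonical" href="https://example.com/shoes">'
    "</head><body>Shoes</body></html>"
)

print(get_canonical(tracked_page))             # https://example.com/shoes
print(get_canonical("<html><head></head></html>"))  # None
```

Here the URL with the tracking parameter declares the clean URL as canonical, which is the pattern described above for consolidating duplicate versions of a page.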